Dropout Layer Explained in the Context of CNN

What are dropout layers and why are they applied after dense layers?

The dropout layer is a regularization technique used in CNNs (and other deep learning models) to help prevent overfitting. Overfitting occurs when a model performs well on the training data but fails to generalize to unseen data.

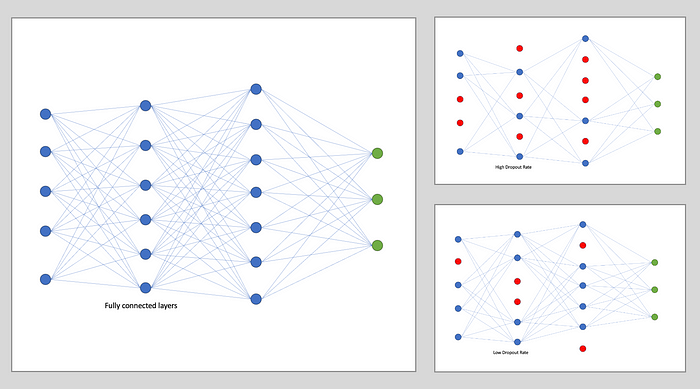

One way to prevent overfitting is to build an ensemble of neural networks with different architectures. However, this approach is computationally costly and requires a lot of programming work. A cheaper alternative is ‘dropout’, which approximates training a diverse range of network architectures with a single model by randomly disabling nodes during the training phase.

The dropout layer works by randomly deactivating a fraction of its input units at each training update. During forward propagation, each neuron is ‘dropped out’, that is, temporarily ignored along with its associated connections, with a specified probability (the dropout rate). The remaining neurons are then scaled up by a factor of 1/(1 - dropout_rate) so that the expected sum of the activations stays the same despite the dropped neurons.
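The mechanism described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used by any particular framework: the function name `dropout`, its parameters, and the flat-list input are assumptions made for the example.

```python
import random

def dropout(activations, drop_rate, training=True, rng=random):
    """Inverted dropout over a flat list of activations (illustrative sketch)."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    if not training or drop_rate == 0.0:
        # At inference nothing is dropped and nothing is rescaled.
        return list(activations)
    keep_prob = 1.0 - drop_rate
    scale = 1.0 / keep_prob  # the 1/(1 - dropout_rate) factor
    # Keep each unit with probability keep_prob and scale it up; otherwise zero it.
    return [a * scale if rng.random() < keep_prob else 0.0 for a in activations]

# Usage: drop half of 10,000 unit activations with a seeded generator.
rng = random.Random(0)
acts = [1.0] * 10_000
out = dropout(acts, drop_rate=0.5, rng=rng)
mean_out = sum(out) / len(out)  # stays close to the original mean of 1.0
```

With a dropout rate of 0.5, each surviving activation is doubled, so the average activation stays roughly where it was even though about half of the units are zeroed.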

Because neurons are randomly excluded, the model becomes less dependent on any specific one and is pushed to learn more robust and generalizable representations. By introducing noise into the network, dropout mitigates the co-adaptation of neurons and reduces the likelihood of overfitting.

Why use dropout after dense layers?

Dropout can be applied to the outputs of most layer types, including convolutional layers, wherever the network can benefit from regularization. It is most often placed after dense layers, however, because dense layers typically have a large number of parameters and can easily overfit the training data. Applying dropout there regularizes the connections between the neurons of consecutive layers, encourages the network to learn more robust representations, and reduces its reliance on specific neurons. During inference (testing), dropout is not applied and the network uses all of its neurons.
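To illustrate the difference between training and inference, the sketch below places dropout after a tiny dense layer and compares the two modes. Everything here is hypothetical and chosen only for the example: the helper names `dense_relu` and `dropout`, the weights, the input, and the dropout rate of 0.5. Because kept activations are scaled up during training, the average of many training-mode passes should land close to the single inference-mode output.

```python
import random

def dense_relu(inputs, weights, biases):
    """Fully connected layer with ReLU: one output per row of weights."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def dropout(values, drop_rate, training, rng):
    """Inverted dropout: active only while training."""
    if not training:
        return list(values)  # inference: all neurons are used as-is
    keep_prob = 1.0 - drop_rate
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(42)
x = [0.5, -1.0, 2.0]                      # made-up input
W = [[0.2, -0.4, 0.1],                    # made-up weights
     [0.7, 0.3, -0.2],
     [-0.5, 0.1, 0.6]]
b = [0.1, 0.0, -0.1]

hidden = dense_relu(x, W, b)

# Inference: dropout is a no-op.
inference_out = dropout(hidden, 0.5, training=False, rng=rng)

# Training: each pass sees a different random subset of neurons.
n_passes = 10_000
totals = [0.0] * len(hidden)
for _ in range(n_passes):
    for i, v in enumerate(dropout(hidden, 0.5, training=True, rng=rng)):
        totals[i] += v
train_avg = [t / n_passes for t in totals]
```

Comparing `train_avg` with `inference_out` shows why no extra rescaling is needed at test time when the scaling is already done during training.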